I’ve spent quite some time in IT doing enterprise integration, and if there’s one truth that consistently holds up, it’s that a solid foundation prevents future disappointment or failure. We’ve all been there: a rush to deliver features on a shaky, unvalidated architecture, leading to months of painful, expensive refactoring down the line.

My time in retail, where I was involved in rebuilding an integration platform, taught me exactly that. In the world of integration, where you’re constantly juggling disparate systems, multiple data formats, and unpredictable volumes, a solid architecture is paramount. I have always tried to build the best solution based on experience rather than on what’s written in the literature.

What is funny to me is that, looking back at how I built the integration platform, I realized I had been applying patterns like the Walking Skeleton for architectural validation and Pipes and Filters for resilient, flexible integration flows.

The Walking Skeleton came to my attention through a fellow architect at my current workplace, and I realized that this is exactly what my team and I had done at the retailer. So I should read some literature from time to time!

The Walking Skeleton: Your Architectural First Step

Before you write a line of business logic, you need to prove your stack works from end to end. The Walking Skeleton is precisely that: a minimal, fully functional implementation of your system’s architecture.

It’s not an MVP (Minimum Viable Product), which is a business concept focused on features; the Skeleton is a technical proof-of-concept focused on connectivity.

Why Build the Skeleton First?

- Risk Mitigation: You validate that your major components (UI, API Gateway, Backend Services, Database, Message Broker) can communicate and operate correctly before you invest heavily in complex features.

- CI/CD Foundation: By its nature, the Skeleton must run end-to-end. This forces you to set up your CI/CD pipelines early, giving you a working deployment mechanism from day one.

- Team Alignment: A running system is the best documentation. Everyone on the team gets a shared, tangible understanding of how data flows through the architecture.

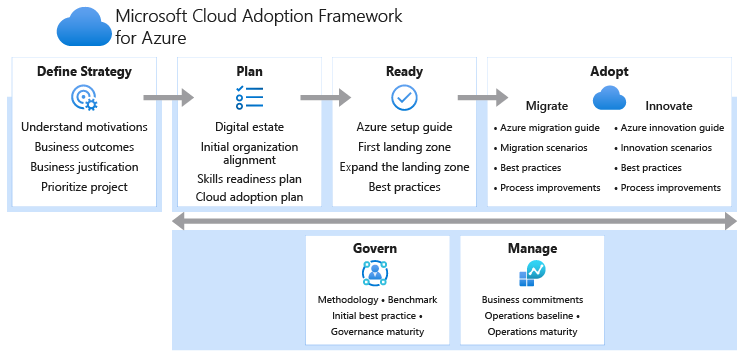

Suppose you’re building an integration platform in the cloud, for example on Azure. In that case, the Walking Skeleton confirms that your service choices, such as Azure Functions and Logic Apps, integrate with your storage, networking, and security layers. I hope to be doing exactly that again in the near future.
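To make the idea concrete, here is a minimal sketch of a skeleton "walk": one trivial message pushed through every layer, with a check that it arrives. The layers are hypothetical in-memory stand-ins (a `queue.Queue` for the broker, a dict for the store); in a real platform each function would call the actual service, and the names below are my own illustration rather than any Azure API.

```python
import queue

# Hypothetical in-memory stand-ins for the real layers (broker, database).
broker = queue.Queue()
store = {}


def api_receive(payload: dict) -> None:
    """Entry point: accept a request and hand it to the broker."""
    broker.put(payload)


def worker_process_one() -> None:
    """Backend: take one message off the broker and persist it unchanged."""
    message = broker.get_nowait()
    store[message["id"]] = message


def smoke_test() -> bool:
    """Send one trivial message through every layer and check it arrives."""
    probe = {"id": "ping-1", "body": "hello"}
    api_receive(probe)
    worker_process_one()
    return store.get("ping-1") == probe


if __name__ == "__main__":
    print("skeleton walks:", smoke_test())
```

There is no business logic here on purpose. The only thing the check proves is that the pieces are wired together, which is exactly what a deployment pipeline can run after every release.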

Leveraging Pipes and Filters Within the Skeleton

Now, let’s look at what that “minimal, end-to-end functionality” should look like, especially for data and process flow. The Pipes and Filters pattern is ideally suited for building the first functional slice of your integration Skeleton.

The pattern works by breaking down a complex process into a sequence of independent, reusable processing units (Filters) connected by communication channels (Pipes).

How They Map to Integration:

- Filters = Single Responsibility: Each Filter performs one specific, discrete action on the data stream, such as:

  - Schema Validation

  - Data Mapping (XML to JSON)

  - Business Rule Enrichment

  - Auditing/Logging

- Pipes = Decoupled Flow: The Pipes ensure data flows reliably between Filters, typically via a message broker or an orchestration layer.
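The mapping above can be sketched in a few lines of Python. This is an illustration under assumptions, not the implementation we ran at the retailer: the message shape, the `article` schema, the `channel` rule, and all function names are hypothetical, and the Pipe is reduced to a plain function call where a real platform would use a queue or topic.

```python
import json
import xml.etree.ElementTree as ET
from typing import Callable, Iterable

# A Filter takes a message and returns a new message.
Filter = Callable[[dict], dict]


def validate_schema(msg: dict) -> dict:
    """Schema Validation: reject anything that is not an <article> with a <sku>."""
    root = ET.fromstring(msg["body"])
    if root.tag != "article" or root.find("sku") is None:
        raise ValueError("message does not match the assumed article schema")
    return msg


def map_xml_to_json(msg: dict) -> dict:
    """Data Mapping: turn the flat XML body into a JSON string."""
    root = ET.fromstring(msg["body"])
    data = {child.tag: child.text for child in root}
    return {**msg, "body": json.dumps(data, sort_keys=True)}


def enrich(msg: dict) -> dict:
    """Business Rule Enrichment: add a field (hypothetical rule)."""
    data = json.loads(msg["body"])
    data["channel"] = "web"
    return {**msg, "body": json.dumps(data, sort_keys=True)}


def audit(msg: dict) -> dict:
    """Auditing/Logging: record that the message was processed."""
    return {**msg, "audit": msg.get("audit", []) + ["processed"]}


def run_pipeline(msg: dict, filters: Iterable[Filter]) -> dict:
    """The Pipe: pass the output of each Filter to the next one."""
    for apply_filter in filters:
        msg = apply_filter(msg)
    return msg


if __name__ == "__main__":
    incoming = {"body": "<article><sku>A-1</sku><name>Mug</name></article>"}
    result = run_pipeline(incoming, [validate_schema, map_xml_to_json, enrich, audit])
    print(result["body"])
```

Each Filter knows nothing about its neighbours, so reordering, removing, or adding a step only changes the list passed to `run_pipeline`.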

In a serverless environment (e.g., using Azure Functions for the Filters and Azure Service Bus/Event Grid for the Pipes), this pattern delivers immense value:

- Composability: Need to change a validation rule? You only update one small, isolated Filter. Need a new output format? You add a new mapping Filter at the end of the pipe.

- Resilience: If one Filter fails, the data is typically held in the Pipe (queue/topic), preventing the loss of the entire transaction and allowing for easy retries.

- Observability: Each Filter is a dedicated unit of execution. This makes monitoring, logging, and troubleshooting precise: no more “black box” failures.
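The resilience point is easiest to see in code. The sketch below uses a `deque` as a hypothetical stand-in for a queue: a message whose Filter fails goes back on the Pipe and is retried, and after an assumed limit of three attempts it is moved to a dead-letter list. The message fields, the limit, and the function names are illustrative assumptions; a managed broker handles delivery counts and dead-lettering for you.

```python
from collections import deque
from typing import Callable

MAX_ATTEMPTS = 3  # assumed retry limit, for illustration only


def drain(pipe: deque, filter_fn: Callable[[str], str], dead_letter: list) -> list:
    """Process every message; failed ones return to the pipe until attempts run out."""
    delivered = []
    while pipe:
        msg = pipe.popleft()
        try:
            delivered.append(filter_fn(msg["body"]))
        except Exception as exc:
            msg["attempts"] += 1
            msg["last_error"] = str(exc)  # keeps the failure visible per message
            if msg["attempts"] < MAX_ATTEMPTS:
                pipe.append(msg)          # message is kept in the pipe for a retry
            else:
                dead_letter.append(msg)   # give up, but do not lose the message
    return delivered


def make_flaky_filter() -> Callable[[str], str]:
    """Build a Filter that fails on its first call and succeeds afterwards."""
    state = {"calls": 0}

    def flaky_uppercase(body: str) -> str:
        state["calls"] += 1
        if state["calls"] == 1:
            raise RuntimeError("transient outage")
        return body.upper()

    return flaky_uppercase


if __name__ == "__main__":
    pipe = deque([{"body": "price update", "attempts": 0}])
    dead = []
    print(drain(pipe, make_flaky_filter(), dead), dead)
```

Because the error is stored on the message itself, a failed item tells you which Filter attempt broke and why, which is the observability benefit in miniature.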

The Synergy: Building and Expanding

The real power comes from using the pattern within the process of building and expanding your Walking Skeleton:

- Initial Validation (The Skeleton): Select the absolute simplest, non-critical domain (e.g., an Article Data Distribution pipeline, as I have done with my team for retailers). Implement this single, end-to-end flow using the Pipes and Filters pattern. This proves that your architectural blueprint and your chosen integration pattern work together.

- Iterative Expansion: Once the Article Pipe is proven, validating the architectural choice, deployment, monitoring, and scaling, you have a template.

  - At the retailer, we subsequently built the integration for the Pricing domain by creating a new Pipe that reuses common Filters (e.g., the logging or basic validation Filters).

  - Next, we picked another domain by cloning the proven pipeline architecture and swapping in the domain-specific Filters.

You don’t start from scratch; you reapply a proven, validated template across domains. This approach dramatically reduces time-to-market and ensures that every new domain is built on a resilient, transparent, and scalable foundation.
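As a rough sketch of that reuse, the snippet below builds two pipelines from the same template: shared logging and validation Filters come first, and each domain plugs in its own mapping Filter. All names, message fields, and mapping rules are hypothetical and only illustrate the shape of the idea.

```python
from typing import Callable

Filter = Callable[[dict], dict]


def log_filter(msg: dict) -> dict:
    """Shared Filter: leave a trace that the message passed through."""
    return {**msg, "trail": msg.get("trail", []) + ["logged"]}


def require_id(msg: dict) -> dict:
    """Shared Filter: basic validation that every domain needs."""
    if "id" not in msg:
        raise ValueError("message has no id")
    return {**msg, "trail": msg.get("trail", []) + ["validated"]}


def map_article(msg: dict) -> dict:
    """Article-specific Filter (hypothetical rule): normalise the name."""
    return {**msg, "title": msg["name"].strip().title()}


def map_price(msg: dict) -> dict:
    """Pricing-specific Filter (hypothetical rule): convert to cents."""
    return {**msg, "price_cents": round(float(msg["price"]) * 100)}


def build_pipeline(*domain_filters: Filter) -> Callable[[dict], dict]:
    """The template: common Filters first, then whatever the domain adds."""
    filters = [log_filter, require_id, *domain_filters]

    def run(msg: dict) -> dict:
        for apply_filter in filters:
            msg = apply_filter(msg)
        return msg

    return run


# Two domains, one proven template.
article_pipeline = build_pipeline(map_article)
pricing_pipeline = build_pipeline(map_price)

if __name__ == "__main__":
    print(article_pipeline({"id": "a1", "name": " coffee mug "}))
    print(pricing_pipeline({"id": "p1", "price": "19.99"}))
```

Adding a third domain is one new mapping function and one call to `build_pipeline`; the shared Filters are not touched.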

My advice, based on what I know now, is not to skip the Skeleton, and not to build a monolith inside it. If you are rebuilding an integration platform in Azure, start with a Walking Skeleton built from Pipes and Filters to get a durable, future-proof architecture for enterprise integration.

What architectural pattern do you find most useful when kicking off a new integration project? Drop a comment!