The days of prepurchasing a large amount of infrastructure are gone. Instead, in the cloud, we buy small units of resources at a low cost. As a result, developers have the freedom to provision resources and deploy their apps. They can spend company money at the click of a button or with a line of code. There is no longer a need to go through a procurement process.

Therefore, you could ask: should developers be aware of the running costs of their apps and the supporting infrastructure? And should they also worry about SKUs, dimensioning, and unattended resources? I would say yes, they should be aware. Depending on requirements, environments (dev, test, acceptance, and production), availability, security, test strategy, and so on, costs will accumulate. Keeping an eye on cost from the start prevents discussions at the end of the month when the bill is too high or the chosen deployment of Azure resources cannot be justified.

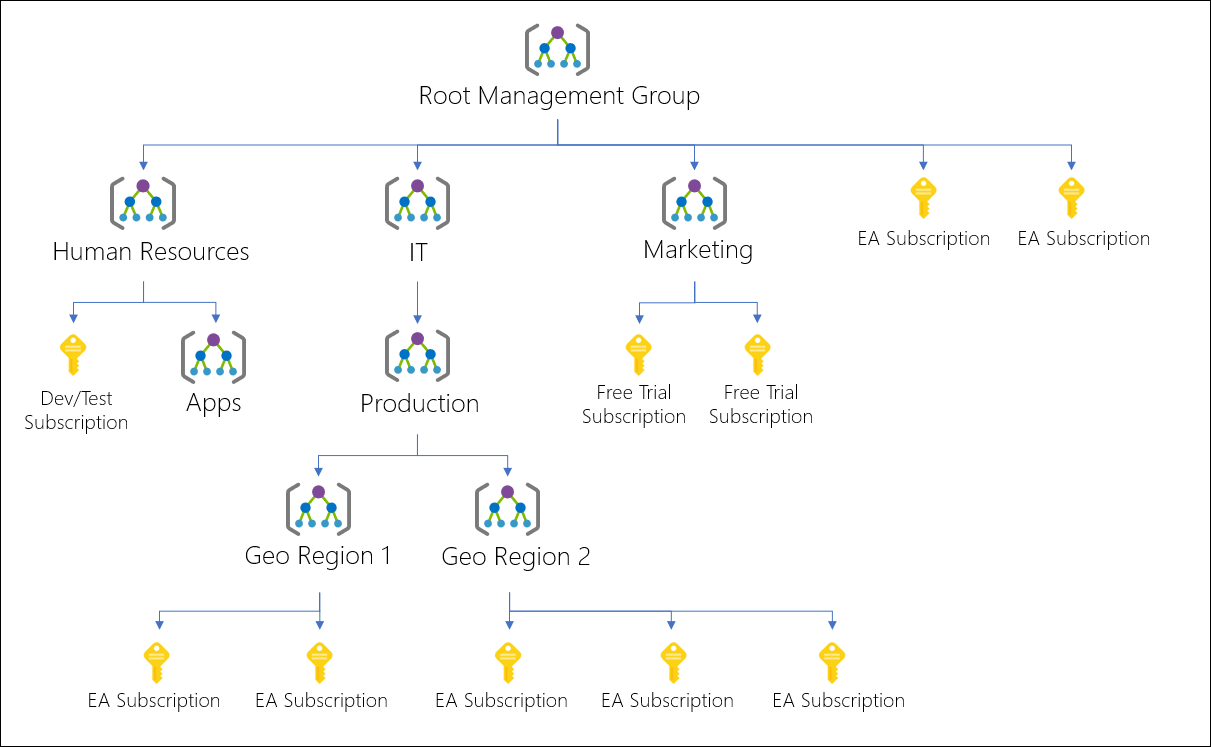

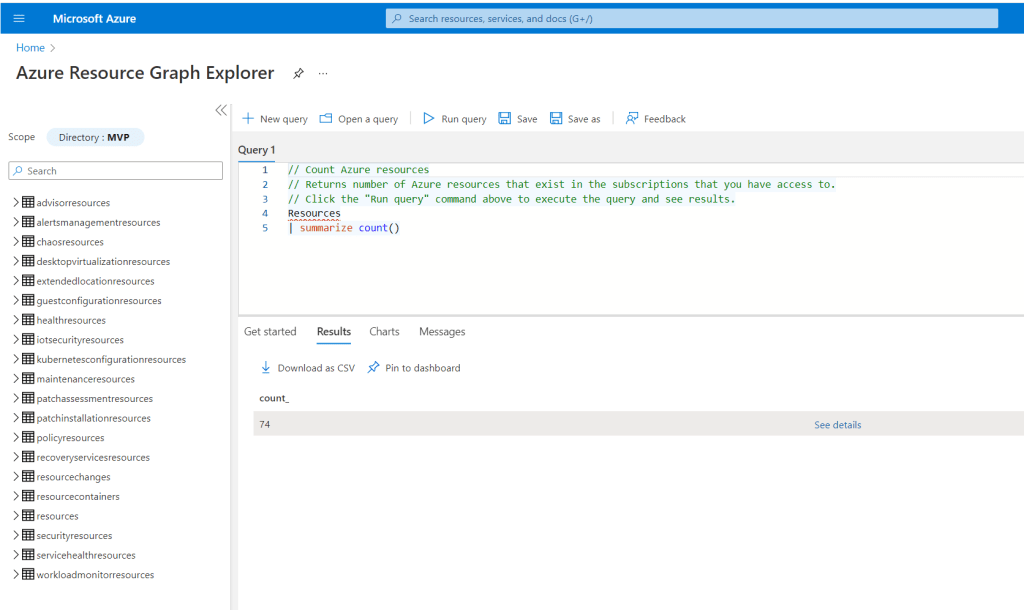

Fortunately, there are services and tools available to help you estimate, monitor, and analyze costs for cost optimization. Furthermore, you can help identify costs by applying tags to your Azure resources, which is important when the costs of Azure resources in a subscription are shared across departments.

Azure Calculator

Microsoft provides a cloud platform called Azure containing over 100 services for its customers. Customers are charged for most of these services as they consume them. These charges can be estimated using the so-called Pricing calculator.

You can search for a product (service) with the pricing calculator and subsequently select it.

Next, a pop-up window will appear on the right-hand side, where you click View. Finally, a window will appear with the options for, in this case, Logic Apps. You can select the region where you would like to provision your product (service) and, depending on the hosting option and other criteria, specify what you expect to consume. In addition, you can select the type of support and the licensing model you want, and there is also a switch that shows the dev/test pricing for the product.

Furthermore, if you want to estimate a solution consisting of multiple products, you can select all of them before specifying the consumption characteristics. The calculator will, in the end, show the accumulated costs for all products.

Other tabs in the calculator showcase sample scenarios, help you calculate potential cost savings when you already run resources in Azure, and list FAQs. Lastly, at the bottom, you can click the purchasing options for the product(s).

More details of Azure pricing are available on the pricing landing page.

Cost Calculator Considerations

The Azure pricing calculator is a tool for estimating costs, not for reporting the actual costs a client generates when using the products. Actual costs depend on the workload, the number of environments, sizing, and support costs (not just from Microsoft itself, but also the cost of those managing the product on the client side). Using the tool can be a good starting point to give the client a feeling for the costs of potential workloads that run on the platform. Furthermore, you can use the tool's estimates for an overall calculation that includes multiple environments, sizing, and support by leveraging Excel. In addition, there is a TCO calculator available through the Azure pricing landing page.
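To make the overall calculation concrete, here is a minimal Python sketch of the same arithmetic you would otherwise do in Excel. All names and figures are hypothetical: the monthly amounts stand in for estimates you would copy from the pricing calculator, and the environment factors are an assumed way to express that dev and test are sized smaller than production.

```python
from dataclasses import dataclass


@dataclass
class LineItem:
    name: str
    monthly_cost: float  # hypothetical estimate for one full-size environment


def estimate_total(items, environment_factors, monthly_support=0.0):
    """Return (cost per environment, grand total) for a monthly estimate."""
    base = sum(item.monthly_cost for item in items)
    # Scale the full-size estimate per environment (for example, dev at 50%).
    per_env = {env: round(base * factor, 2)
               for env, factor in environment_factors.items()}
    total = round(sum(per_env.values()) + monthly_support, 2)
    return per_env, total


# Hypothetical figures, not real Azure prices.
items = [LineItem("Logic Apps", 40.0), LineItem("Storage account", 10.0)]
factors = {"dev": 0.5, "test": 0.5, "acceptance": 1.0, "production": 1.0}

per_env, total = estimate_total(items, factors, monthly_support=29.0)
print(per_env)
print(total)
```

The point of the sketch is that the number of environments and the support plan can easily outweigh the price of a single product, so they belong in the estimate from the start.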

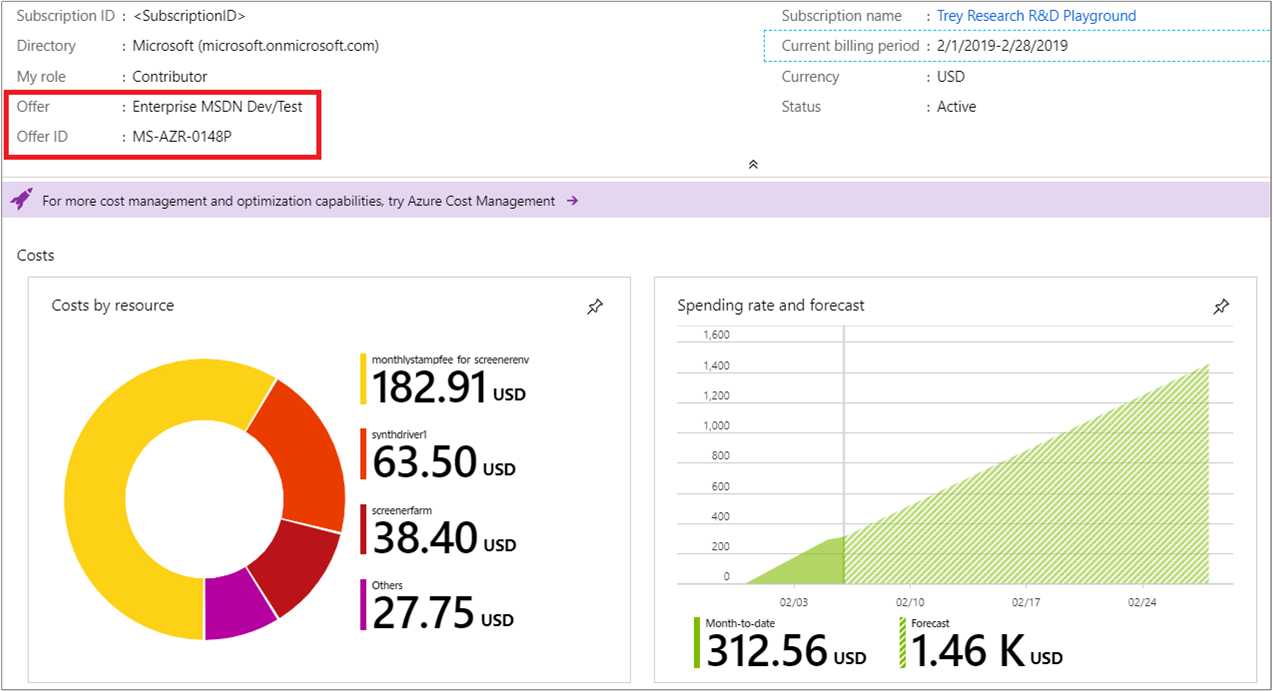

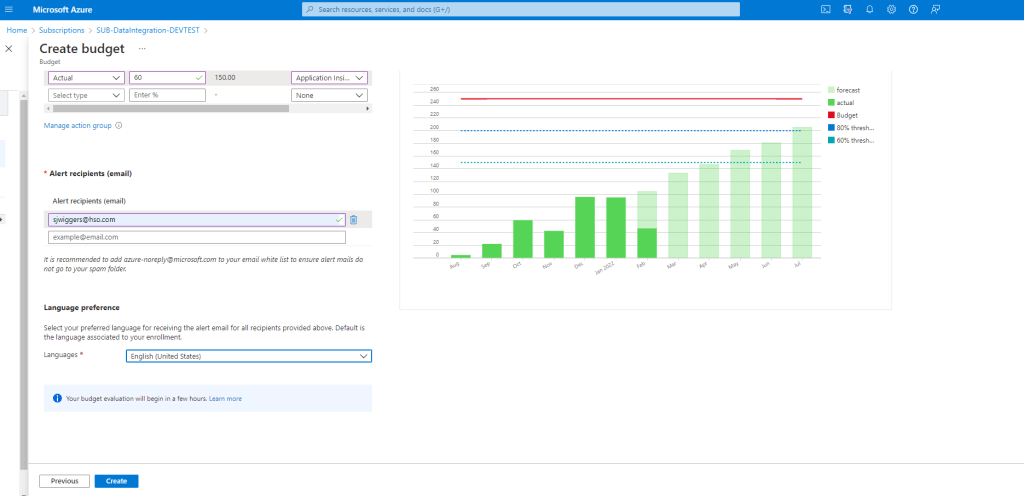

Cost Management

The Cost Management + Billing service and its features are available in any subscription in the Azure portal. It allows you to perform administrative tasks around billing, set spending thresholds, and proactively analyze Azure cost generation. For example, in the Azure portal, under Cost Management + Billing, you can find Budgets to create a budget for the costs in your subscription. When creating a budget, you can define thresholds on actual and forecasted costs, manage an action group, and specify the email recipients and the language for alerts.
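To illustrate how thresholds on actual and forecasted costs relate to a budget amount, here is a small Python sketch. It is a local model of the idea only, not the Azure service or its API: the `Threshold` class, the `triggered_alerts` function, and all amounts are hypothetical, and I assume a threshold fires once the cost reaches the given percentage of the budget.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    percent: float  # percentage of the budget amount
    kind: str       # "actual" or "forecasted"


def triggered_alerts(budget_amount, actual_cost, forecasted_cost, thresholds):
    """Return the thresholds that have been reached (assumed semantics)."""
    alerts = []
    for threshold in thresholds:
        # Compare against actual spend or the forecast, depending on the kind.
        cost = actual_cost if threshold.kind == "actual" else forecasted_cost
        if cost >= budget_amount * threshold.percent / 100:
            alerts.append(threshold)
    return alerts


thresholds = [
    Threshold(80, "actual"),
    Threshold(100, "actual"),
    Threshold(100, "forecasted"),
]

# Hypothetical month: 820 spent so far, 1100 forecasted, budget of 1000.
alerts = triggered_alerts(1000, 820, 1100, thresholds)
print(alerts)
```

In this example the 80% actual threshold and the 100% forecasted threshold are reached, which is exactly the kind of early warning you want before the bill arrives.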

Cost Management Considerations

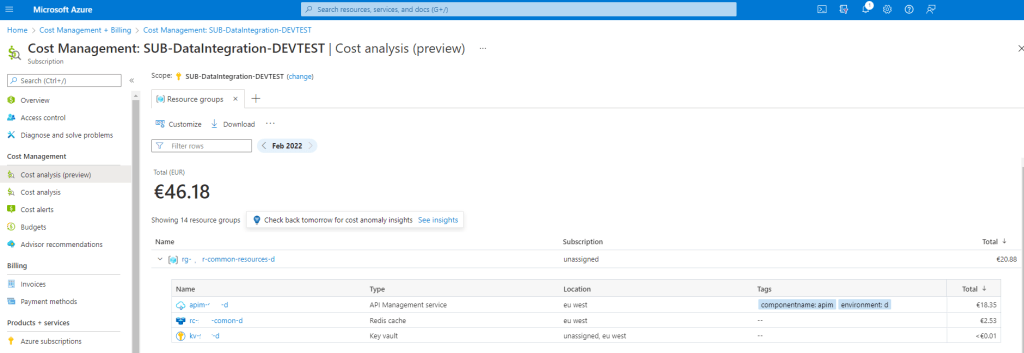

A key aspect of cost control is to set up budgets (mentioned earlier) as soon as a subscription is created, before workloads land or resources are provisioned to develop cloud solutions. Furthermore, once consumption of Azure resources starts, you can look at recommendations for cost optimization and at Cost analysis. For instance, the cost analysis (preview) can show the cost per resource group and per service.

It is recommended to separate workloads per subscription, as per the subscription decision guide. One of the benefits is splitting out costs and keeping them under control with budgets. Lastly, Azure Advisor can help identify underutilized or unused resources that can be optimized or shut down.

Tagging

Tagging Azure resources is a good practice. A tag is a key-value pair that helps identify your resource. You can organize your resources with, for instance, a key environment with value dev (development) and a key department with value marketing. Moreover, you can add up to 50 tags (key-value pairs) per resource. Each tag name (key) is limited to 512 characters and each value to 256 characters. More information on limitations is available in the Microsoft docs.
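The limits above are easy to check before you deploy anything. The following Python sketch validates a set of tags against the three limits mentioned in this post. The function name `validate_tags` is my own, and the check is deliberately simplified: some resource types have stricter limits, which the sketch does not cover.

```python
MAX_TAGS = 50            # maximum number of tags per resource
MAX_NAME_LENGTH = 512    # maximum length of a tag name (key)
MAX_VALUE_LENGTH = 256   # maximum length of a tag value


def validate_tags(tags):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if len(tags) > MAX_TAGS:
        problems.append(f"{len(tags)} tags exceed the limit of {MAX_TAGS}")
    for name, value in tags.items():
        if len(name) > MAX_NAME_LENGTH:
            problems.append(f"tag name '{name[:20]}...' is too long")
        if len(value) > MAX_VALUE_LENGTH:
            problems.append(f"value of tag '{name}' is too long")
    return problems


tags = {"environment": "dev", "department": "marketing"}
print(validate_tags(tags))
```

A check like this fits well in a deployment pipeline, where it can fail fast instead of waiting for the deployment itself to be rejected.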

Tagging Considerations

With tags, you can assign helpful information to any resource within your cloud infrastructure, usually information that is not included in the name or available in the overview of the resource. Tagging is critical for cost management, operations, and management of resources. More details on how to apply tags are available in the decision guide. Furthermore, you can enforce tagging through Azure policies; see the Microsoft documentation on policy definitions for tag compliance.
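To get a feeling for what a tag compliance rule checks, here is a Python sketch that reports which resources lack required tags. This is not Azure Policy itself, only a local illustration of the idea: the required tag names and the resource names are hypothetical, and I compare tag names case-insensitively on the assumption that this matches how Azure treats tag names.

```python
REQUIRED_TAGS = ("environment", "department")  # hypothetical company convention


def missing_tags(resource_tags, required=REQUIRED_TAGS):
    """Return the required tag names that are absent from a resource's tags."""
    present = {name.lower() for name in resource_tags}
    return [name for name in required if name.lower() not in present]


# Hypothetical resources with their tags.
resources = {
    "vm-web-01": {"Environment": "dev", "department": "marketing"},
    "st-logs-01": {"environment": "prod"},
    "la-orders-01": {},
}

non_compliant = {}
for name, tags in resources.items():
    missing = missing_tags(tags)
    if missing:
        non_compliant[name] = missing

print(non_compliant)
```

A policy does this continuously and at scale, and can deny or flag non-compliant resources, whereas a script like this only gives you a snapshot.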

Reporting

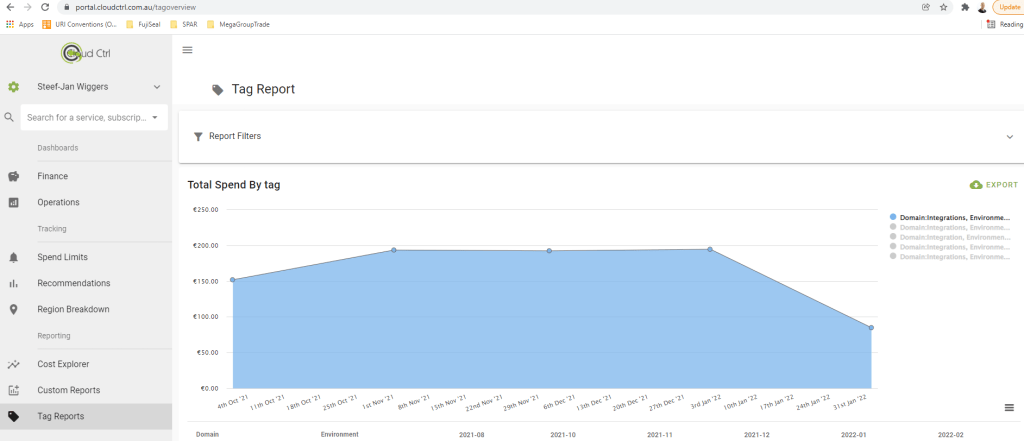

Stakeholders in Azure projects will be interested in cost accumulation for workloads in subscriptions. Therefore, reports of resource consumption in euros, for example, are required. These reports can be viewed in the Azure portal under Cost Management + Billing. However, you will need filters in the cost analysis, or the preview functionality, to be more specific. Alternatively, you can export the data to a storage account and hook it up to Power BI, or use third-party tooling like CloudCtrl.
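Once the data is exported, a simple report is only a few lines of code away. The Python sketch below sums cost per department from CSV data. Note that the column names (`ResourceGroup`, `Department`, `Cost`) and the sample rows are a simplified, hypothetical layout; a real export contains many more columns and its column names may differ, so check your own export before reusing this.

```python
import csv
import io
from collections import defaultdict

# Hypothetical, simplified stand-in for an exported cost file.
SAMPLE_EXPORT = """ResourceGroup,Department,Cost
rg-web,marketing,12.50
rg-web,marketing,7.25
rg-data,finance,30.00
"""


def cost_per_group(csv_text, group_column, cost_column="Cost"):
    """Sum the cost column per distinct value of group_column."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row[group_column]] += float(row[cost_column])
    return {key: round(value, 2) for key, value in totals.items()}


by_department = cost_per_group(SAMPLE_EXPORT, "Department")
by_resource_group = cost_per_group(SAMPLE_EXPORT, "ResourceGroup")
print(by_department)
print(by_resource_group)
```

Grouping by a department column only works if resources are tagged consistently, which is one more reason to take tagging seriously.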

And finally, as a developer, you can also leverage the available APIs to get costs and usage data. For example, the Azure Consumption APIs give you programmatic access to cost and usage data for your Azure resources. With the data, you can build reports.
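As a rough idea of what working with such an API looks like, here is a Python sketch using only the standard library. Treat the details as assumptions to verify against the current API reference: the `api-version` value, the usage details path, and the `properties.cost` field in the sample response are based on my understanding of the Consumption API, and the response shape can differ per agreement type. Obtaining an access token is out of scope, so `fetch_page` is defined but not called here.

```python
import json
import urllib.request
from urllib.parse import urlencode

API_VERSION = "2021-10-01"  # assumption: check the API reference for the current version


def usage_details_url(subscription_id, api_version=API_VERSION):
    """Build the (assumed) usage details URL for a subscription scope."""
    scope = f"/subscriptions/{subscription_id}"
    query = urlencode({"api-version": api_version})
    return (f"https://management.azure.com{scope}"
            f"/providers/Microsoft.Consumption/usageDetails?{query}")


def fetch_page(url, access_token):
    """Fetch one page of results using a bearer token you obtained elsewhere."""
    request = urllib.request.Request(
        url, headers={"Authorization": f"Bearer {access_token}"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def total_cost(page):
    """Sum the cost of all items in one response page (assumed field names)."""
    return round(sum(item["properties"]["cost"]
                     for item in page.get("value", [])), 2)


# Hand-written sample in the assumed response shape, not real data.
sample_page = {"value": [{"properties": {"cost": 1.25}},
                         {"properties": {"cost": 2.5}}]}

url = usage_details_url("00000000-0000-0000-0000-000000000000")
print(url)
print(total_cost(sample_page))
```

In practice you would probably reach for an SDK and handle paging and authentication properly, but the sketch shows that the data behind the portal reports is within reach of a developer.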

Reporting Considerations

With cost reports, it is essential to realize who the target audience is, what information they are looking for, and how to present it. In addition, each active resource consumes Azure infrastructure inside a data center, leading to cost. And cost should represent value in the end. Hence, reporting is critical for stakeholders in your cloud projects. The analysis of costs is in good hands with the cost analysis capabilities; however, the presentation requirements might differ and sometimes require a custom report built with, for instance, Power BI or a third-party tool.

Wrap up

In this blog post, we discussed Azure cost and hopefully made it clear that developers should care about cost and that they have tools and services available to make life easier. For example, they can set up cost management infrastructure themselves in their dev/test subscriptions if it is not already enforced or done by IT. Furthermore, they can make IT and the architect(s) aware if it is not in place. In the end, I believe it is a shared responsibility of developers and the IT staff responsible for managing the Azure environments/subscriptions.